2020. 2. 12. 15:22ㆍStudy/Projects

What is a Data Pipeline?

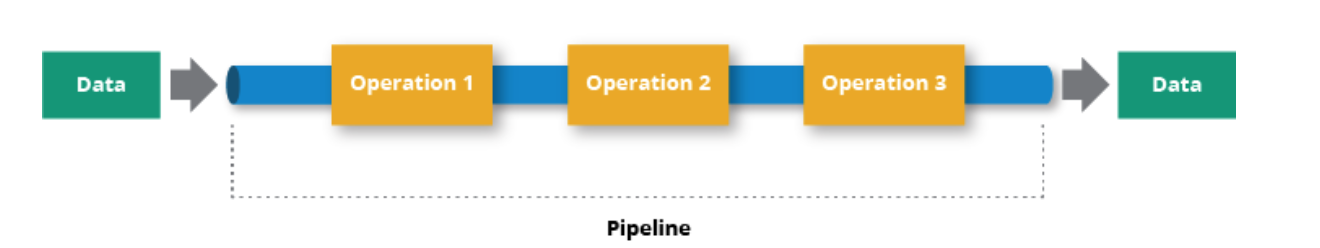

A data pipeline is a series of data processing steps. Data pipelines consist of three key elements: a source, a processing step or steps, and a destination. In some data pipelines, the destination may be called a sink. Data pipelines enable the flow of data from an application to a data warehouse, from a data lake to an analytics database, or into a payment processing system.
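The three elements can be sketched in a few lines of Python. This is only an illustration: the `source`, `parse_amount`, and `run_pipeline` names, the order records, and the in-memory list standing in for a warehouse are all assumptions, not part of any particular tool.

```python
from typing import Callable, Iterator, List

Record = dict
Step = Callable[[Iterator[Record]], Iterator[Record]]


def source() -> Iterator[Record]:
    """Hypothetical source: raw order records emitted by an application."""
    yield {"order_id": 1, "amount": "19.99"}
    yield {"order_id": 2, "amount": "5.00"}


def parse_amount(records: Iterator[Record]) -> Iterator[Record]:
    """Processing step: convert the amount from text to a number."""
    for record in records:
        yield {**record, "amount": float(record["amount"])}


def run_pipeline(src: Iterator[Record], steps: List[Step], sink: List[Record]) -> None:
    """Chain the steps over the source and write every result to the sink."""
    stream = src
    for step in steps:
        stream = step(stream)
    for record in stream:
        sink.append(record)


# The sink here is just a list standing in for a data warehouse table.
warehouse: List[Record] = []
run_pipeline(source(), [parse_amount], warehouse)
print(warehouse)
```

Because each step takes and returns an iterator, more steps can be added to the list without changing the source or the sink.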

Microservices are moving data between more and more applications, making the efficiency of data pipelines a critical consideration in their planning and development. Data generated in one source system or application may feed multiple data pipelines, and those pipelines may have multiple other pipelines or applications that are dependent on their outputs.

For example, consider a single comment on social media. This event could generate data to feed a real-time report counting social media mentions, a sentiment analysis application that outputs a positive, negative, or neutral result, or an application charting each mention on a world map. Though the data comes from the same source in all cases, each of these applications is built on its own data pipeline, which must complete smoothly before the end user sees the result.

Common steps in data pipelines include data transformation, augmentation, enrichment, filtering, grouping, aggregating, and the running of algorithms against that data.
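A few of these steps (filtering, enrichment, grouping, and aggregating) might look like the following when applied to social media mentions. The sample records, the `REGION_BY_COUNTRY` lookup table, and the function names are made up for illustration.

```python
from collections import defaultdict

# Hypothetical input: social media mentions, one of them with no text.
mentions = [
    {"user": "a", "country": "KR", "text": "love it"},
    {"user": "b", "country": "US", "text": ""},
    {"user": "c", "country": "KR", "text": "not great"},
]

# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY = {"KR": "APAC", "US": "AMER"}


def filter_empty(records):
    """Filtering: drop mentions that carry no text."""
    return [r for r in records if r["text"].strip()]


def enrich_region(records):
    """Enrichment: attach a region looked up from the country code."""
    return [
        {**r, "region": REGION_BY_COUNTRY.get(r["country"], "UNKNOWN")}
        for r in records
    ]


def count_by(records, key):
    """Grouping and aggregating: count records per value of `key`."""
    counts = defaultdict(int)
    for r in records:
        counts[r[key]] += 1
    return dict(counts)


result = count_by(enrich_region(filter_empty(mentions)), "region")
print(result)
```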

What is a Big Data Pipeline?

As the volume, variety, and velocity of data have dramatically grown in recent years, architects and developers have had to adapt to "big data". The term "big data" implies that there is a huge volume to deal with. This volume of data can open opportunities for use cases such as predictive analytics, real-time reporting, and alerting, among many others.

Like many components of data architecture, data pipelines have evolved to support big data. Big data pipelines are data pipelines built to accommodate one or more of the three traits of big data. The velocity of big data makes it appealing to build streaming data pipelines, so that data can be captured and processed in real time and some action can follow. The volume of big data requires that data pipelines be scalable, as the volume can vary over time. In practice, many big data events are likely to occur simultaneously or very close together, so the big data pipeline must be able to scale to process significant volumes of data concurrently. The variety of big data requires that big data pipelines be able to recognize and process data in many different formats: structured, unstructured, and semi-structured.
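To make the variety point concrete, here is a minimal sketch of one ingestion function that accepts three formats and turns each into a list of dictionaries. The `normalize` function and its `fmt` labels are assumptions chosen for this example; a real pipeline would usually detect or declare formats in its own way.

```python
import csv
import io
import json


def normalize(payload: str, fmt: str) -> list:
    """Turn a payload in one of several formats into a list of dict records."""
    if fmt == "json":  # semi-structured
        data = json.loads(payload)
        return data if isinstance(data, list) else [data]
    if fmt == "csv":  # structured, with a header row
        return [dict(row) for row in csv.DictReader(io.StringIO(payload))]
    if fmt == "text":  # unstructured: keep each non-empty line as-is
        return [{"text": line} for line in payload.splitlines() if line.strip()]
    raise ValueError(f"unsupported format: {fmt}")


print(normalize('{"id": 1, "tags": ["a", "b"]}', "json"))
print(normalize("id,name\n1,ann\n", "csv"))
print(normalize("first line\n\nsecond line\n", "text"))
```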

Data Pipeline versus ETL

ETL refers to a specific type of data pipeline. ETL stands for "extract, transform, load". It is the process of moving data from a source, such as an application, to a destination, usually a data warehouse. "Extract" refers to pulling data out of a source, "transform" is about modifying the data so that it can be loaded into the destination, and "load" is about inserting the data into the destination.
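The three stages map naturally onto three functions. The sketch below uses only the Python standard library, with an in-memory SQLite database standing in for the data warehouse; the CSV contents, the `users` table, and the function names are assumptions for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical export from a source application.
RAW_CSV = "id,name,signup_date\n1, Ann ,2020-02-12\n2,bob,2020-02-13\n"


def extract(raw: str) -> list:
    """Extract: pull rows out of the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list) -> list:
    """Transform: fix types and tidy names so rows fit the target table."""
    return [
        (int(r["id"]), r["name"].strip().title(), r["signup_date"])
        for r in rows
    ]


def load(conn: sqlite3.Connection, rows: list) -> None:
    """Load: insert the transformed rows into the destination."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for a data warehouse
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute("SELECT id, name FROM users ORDER BY id").fetchall()
print(loaded)
```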

ETL has historically been used for batch workloads, especially on a large scale. But a new breed of streaming ETL tools is emerging as part of the pipeline for real-time streaming event data.

Data Pipeline Considerations

Data pipeline architectures require many considerations. For example, does your pipeline need to handle streaming data? What rate of data do you expect? How much and what types of processing need to happen in the data pipelines? Is the data being generated in the cloud or on-premises, and where does it need to go? Do you plan to build the pipeline with microservices? Are there specific technologies in which your team is already well-versed in programming and maintaining?

Architecture Examples

Data pipelines may be architected in several different ways. One common example of a data pipeline architecture is a batch-based data pipeline. In a batch data pipeline, you may have an application such as a point-of-sale system that generates a large number of data points that you need to push to a data warehouse and an analytics database.

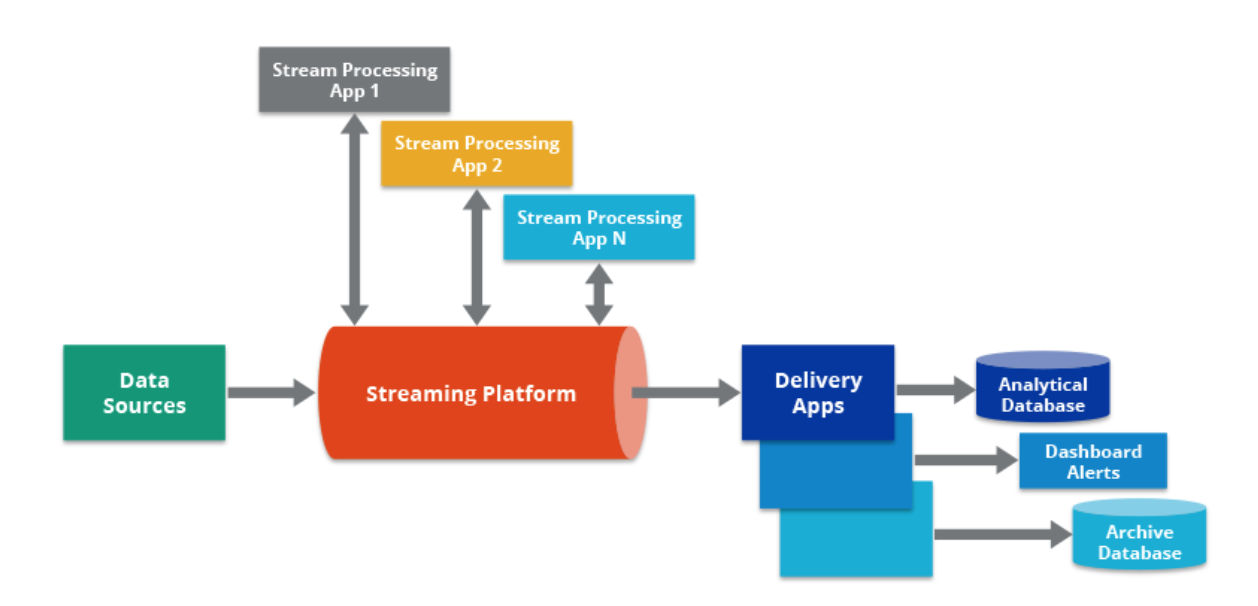
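A batch pipeline of this shape could be sketched as follows: sales accumulated by the point-of-sale system are read in fixed-size chunks, and each chunk is pushed to both destinations. The sample sales, the chunk size of 2, and the list and dictionary standing in for the warehouse and the analytics database are assumptions.

```python
from itertools import islice


def batches(records, size):
    """Yield successive lists of at most `size` records."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


# Hypothetical sales collected by the point-of-sale system during the day.
pos_sales = [{"sale_id": i, "total": 10.0 * i} for i in range(1, 6)]

warehouse = []                              # stands in for the data warehouse
analytics = {"revenue": 0.0, "sales": 0}    # stands in for the analytics database

for chunk in batches(pos_sales, size=2):
    warehouse.extend(chunk)                 # keep every sale in full detail
    analytics["sales"] += len(chunk)        # update aggregated figures
    analytics["revenue"] += sum(s["total"] for s in chunk)

print(len(warehouse), analytics)
```

In a real deployment the loop would typically run on a schedule, for example once a night, rather than inline.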

Another example of a data pipeline architecture is a streaming data pipeline. In a streaming data pipeline, data from the point-of-sale system would be processed as it is generated. The stream processing engine could feed outputs from the pipeline to data stores, marketing applications, and CRMs, among other applications, as well as back to the point-of-sale system itself.

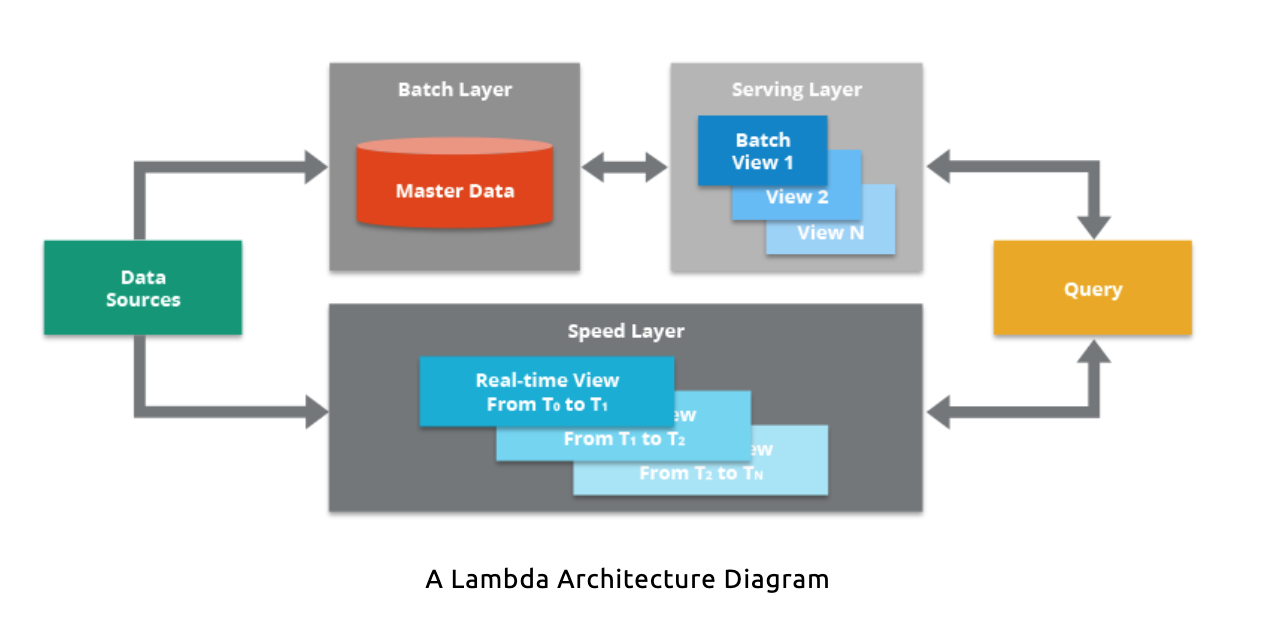
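In contrast to the batch case, a streaming pipeline handles each event as soon as it arrives and fans it out to every consumer. The sketch below uses a generator as the event stream; the consumer functions, the sample events, and the 100.0 threshold for sending a message back to the point-of-sale system are all hypothetical.

```python
def sale_events():
    """Hypothetical stream: sales arriving one at a time from the POS system."""
    yield {"sale_id": 1, "customer": "c1", "total": 120.0}
    yield {"sale_id": 2, "customer": "c2", "total": 15.0}


data_store = []     # stands in for a data store
crm_updates = []    # stands in for a CRM
pos_messages = []   # feedback sent to the point-of-sale system


def to_data_store(event):
    data_store.append(event)


def to_crm(event):
    crm_updates.append((event["customer"], event["total"]))


def to_pos(event):
    # Assumed business rule: large sales trigger an offer at the register.
    if event["total"] >= 100.0:
        pos_messages.append(f"sale {event['sale_id']}: offer loyalty discount")


def process_stream(events, consumers):
    """Handle each event as it arrives and pass it to every consumer."""
    for event in events:
        for consume in consumers:
            consume(event)


process_stream(sale_events(), [to_data_store, to_crm, to_pos])
print(pos_messages)
```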

A third example of a data pipeline is the Lambda Architecture, which combines batch and streaming pipelines into one architecture. The Lambda Architecture is popular in big data environments because it enables developers to account for both real-time streaming cases and historical batch analysis. One key aspect of this architecture is that it encourages storing data in raw format so that you can continually run new data pipelines to correct any code errors in prior pipelines, or to create new data destinations that enable new types of queries.
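A toy version of the idea, assuming a simple per-product count as the query: raw events are kept in an append-only list, a batch view is recomputed from that raw data, a speed view covers events that arrived since the last batch run, and queries merge the two. The `LambdaCounts` class and its method names are invented for this sketch.

```python
from collections import Counter


class LambdaCounts:
    """Toy Lambda Architecture: raw log, batch view, and speed view."""

    def __init__(self):
        self.raw_events = []         # append-only, stored in raw form
        self.batch_view = Counter()  # rebuilt from raw_events on each batch run
        self.speed_view = Counter()  # counts for events since the last batch run

    def ingest(self, event):
        """Store the raw event and update the real-time view immediately."""
        self.raw_events.append(event)
        self.speed_view[event["product"]] += 1

    def run_batch(self):
        """Recompute the batch view from all raw data, then reset the speed view."""
        self.batch_view = Counter(e["product"] for e in self.raw_events)
        self.speed_view.clear()

    def query(self, product):
        """Serve results by merging the batch and real-time views."""
        return self.batch_view[product] + self.speed_view[product]


counts = LambdaCounts()
counts.ingest({"product": "tea"})
counts.ingest({"product": "tea"})
counts.run_batch()
counts.ingest({"product": "tea"})
counts.ingest({"product": "coffee"})
print(counts.query("tea"), counts.query("coffee"))
```

Because `run_batch` always starts from `raw_events`, fixing a bug in the batch logic and rerunning it corrects earlier results, which is the benefit of keeping the raw data.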